Composed Controls Considered Harmful?

Back in 2006 I was very interested in speeding up Linux networking. I was working at a VoIP provider that was majorly underfunded because of the regulatory uncertainty around VoIP at the time. The bad part of the situation was that we didn’t have money to buy the shiny VoIP equipment from the vendors. The good part was that I got to write a lot of software that had to be as fault tolerant as any human could make it. It also meant that we ran everything on either Linux or FreeBSD and so I worked hard to squeeze all of the available performance out of those platforms. I was also particularly interested in Linux networking because I had spent a lot of effort up to that time on crazy things in High Performance computing on Beowulf clusters.

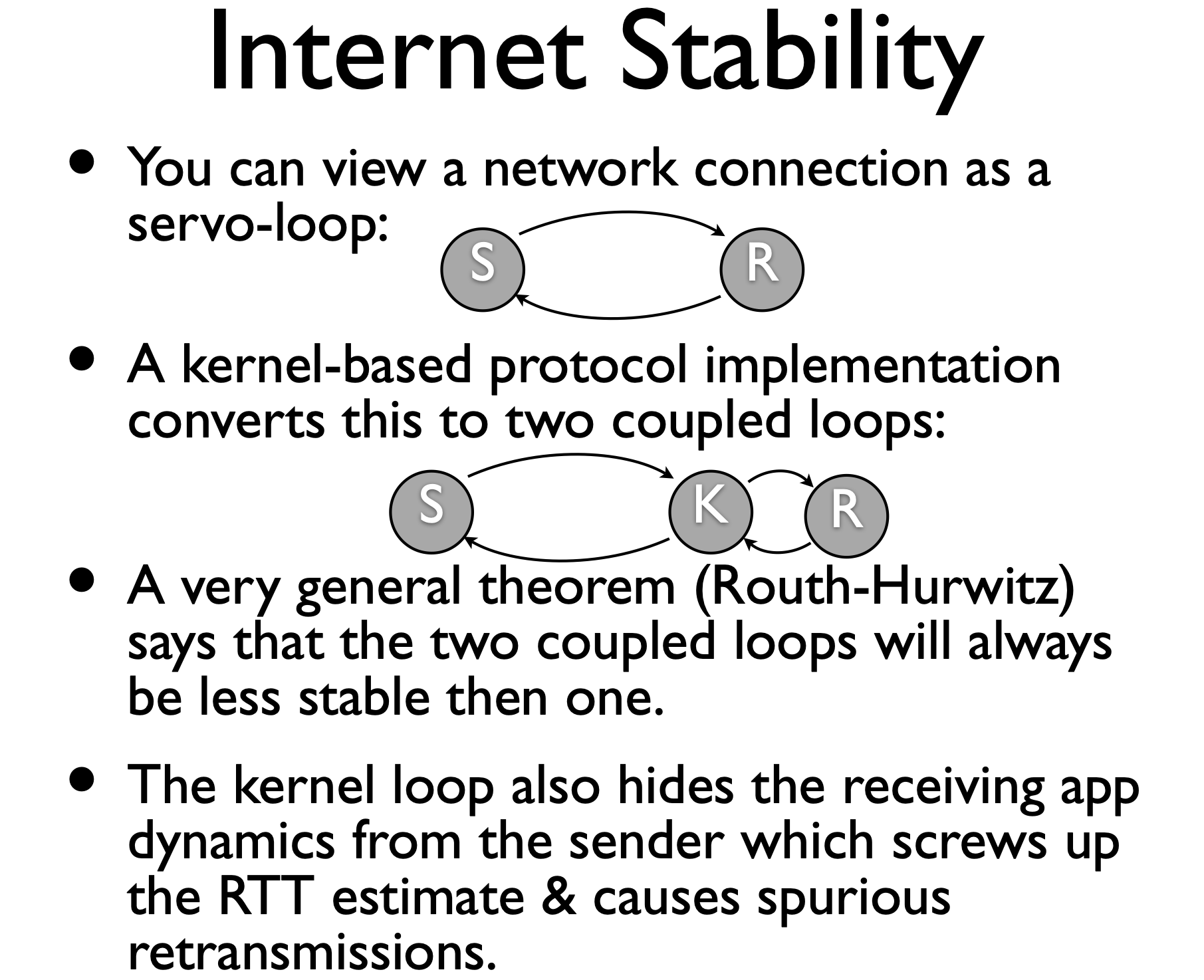

I also used to read LWN, so I was really excited when this popped up: Van Jacobson’s network channels. The channels themselves were neat, and worthy of a talking about in the context of today’s IOVisor and the like, but what I really got excited about in his slides was this, on page 9:

I thought this was a very novel way of thinking about composing systems. At the time I was still a physics-person becoming a computing-person, but I had studied some control theory during my days building particle accelerators. Still, I had no idea what this meant: it just seemed cool. In what follows, I will explain how I understand what he meant. Note that I don’t know him and I’ve never spoken with him about this slide, so this is all in some sense a straw man. Still it’s interesting and there is something to say about why this might just be true.

I’m also quite sure that whatever fraction of the audience were actual control engineers were shaking their heads vigorously in disagreement if not choking on their own tongues. The statement that

A very general theorem (Routh-Hurwitz) says that two coupled loops will always be less stable than one.

is not only mathematically imprecise, but you’d be hard pressed to find a control systems engineer who would agree that is what the theorem states. Controls engineers as a rule aren’t afraid of systems with multiple interacting control loops. In fact they wouldn’t even be able to do their jobs if they were. Our world is full of many billions of wonderfully stable interacting controls. So what was he getting at? His statement might be common knowledge in some camps, but I can tell you that every control systems person I’ve ever discussed this with was unfamiliar with this characterization of the theorem. At any rate, let’s get back to why this intrigued me.

First off it’s important to know that “Routh-Hurwitz” can mean one two likely things in this context:

The Routh-Hurwitz Theorem; and the related Routh-Hurwitz

criterion. Expecting to find find a theorem about the stability of two-loop systems I

excitedly started looking. Eventually I found myself staring at Gradshteyn and Ryzhik (does

anyone even have these anymore?) §15.715. The Routh-Hurwitz theorem establishes a criterion by

which all of the eigenvalues of a matrix

have negatitve real parts if a specific sequence of values

are all be positive. The

Before getting back to the coefficients, let me cover why would we care about this in the context of

control systems. A fundamental result of control theory is that the time-domain response of a system

where the

So in the interest of system stability we care about the real parts of the poles of

This takes us back to the Routh-Hurwitz theorem, which when applied to the characteristic equation of a system gives us the Routh-Hurwitz criterion, which is taught to everyone who studies control theory even though the technique was invented in the ninteenth century. Matlab has been calculating the poles of transfer functions as long as it’s been around.

At any rate, let’s get back to Jacobson’s characterization of the Routh-Hurwitz theorem. The

characteristic equation is a polynomial in

Systems in nature and the systems we usually engineer are typically second order. The fascinating discipline of Mechanical-Electrial Analogies [Olson] provides a formalism under which electrical, mechanical, hydraulic, and acoustical systems can all be reduced to a circuit analysis using Kerchoff’s laws. These systems are all second-order.

I offer that most if not all of the modular blocks of our computing and networking systems are second order. I have two reasons to say this. First, our systems ring (i.e. display damped oscillations) in response to step impulses. Second, if you look at the above materials for the Mechanical-Electrial analogies, or any elementary textbook in controls theory, you will see that the essential properties of a second-order system are damping, energy storage and inertia. If you think of our server systems, or routers, or other of our modular blocks as queuing systems, you can immediately see the damping (tasks leave the queue eventually), and the energy storage (the queue itself).

To see the inertial aspect, think of water hammer in a house with old plumbing. When you turn on the water to stake a shower, then quickly shut off the valve, you sometimes hear a lound banging sound a few second later. This is because the energy that went into compressing the water and the plumbing sloshes around for a bit as a result of the boundary condition of zero flow. Now think of our systems. They clearly slosh around as well. Think of any overhead cost that lags the task: garbage collection, pipelined cache misses, control plane processing. Thus I argue that since our systems are inertial, exhibit energy storage via queueing, and they dampen, so by dynamical analogy they are second-order systems.

For Jacobson’s (supposed) argument it doesn’t really matter if we consider two composed second-order systems or four composed first-order systems. But let’s consider it settled for now that we have a second-order system of interest, say a server on the internet processing credit card transactions. Say we want to check the stability of our system with Routh-Hurwitz. The system will have a characteristic equation

The associated sequence for the Routh-Hurwitz theorem is

and so we see that so long as all of the

where the

At

Control engineering is the discipline of optimizing the choices of system parameters. These

parameters determine the

We all know that our systems behave this way. This brings to mind a great paper from Boyd-Wickizer et. al. in 2012, where the authors modeled the Linux kernel ticket locks via Markov processes and demonstrate bizarre and sudden system performance collapse. Physicists might be tempted to call these kind of things phase transitions. While Boyd-Wickizer et. al. weren’t studying coupled controls, this is the kind of system behavior that I’m sure Jacobson had in mind in his slide.