A Different View on the Coupon Collector Problem

Once during a meeting in which we were discussing the Fischer-Yates shuffle someone asked:

How many times do we need to run the algorithm to see every permutation?

The simplicity of this question belies the subtlety of its answer. In fact, you can’t actually answer the question as posed. Because Fischer-Yates is a random algorithm, there is no certain answer. For the sake pedagogy, I proposed to answer another question, more suggestive of the probabilistic nature of the original:

What’s the average number of times I need to flip a coin in order to see both sides?

Enter The Coupon Collector Problem

A classic problem in combinatorics called the Coupon Collector Problem(CCP) captures the setting and solution for tackling both the original question and the one I posed. A coupon here is anything of which there are

What is the average number of coupons that need to be collected in order to get a full set?

We assign an arbitrary order and index

The last line above defines the

Flipping a coin to see both sides

So, let’s get back to the coin. We can model the situation as a set of experiments where we collect successive flips. These experiments generate sequences in which the

It’s interesting that the sequence of length

On the second step, we’ve inserted the series representation for

We start the sum for

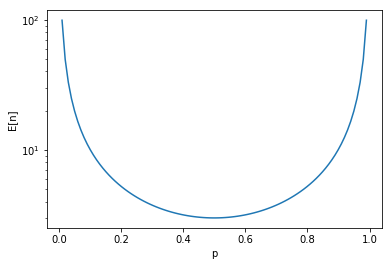

For

This is maybe a way of estimating if a coin is extremely unfair: build an estimator based on the number flips it takes to see both sides.

Fine for coins, but what about the coupons?

Having solved the problem for

- Naive formulation: all equal probabilities, i.e.

- Singularly hard to get: One distinctive

- Anything goes: arbitrary

The first is a by far the easiest analyze, and will carry us through to the thing I want to show in this article. For the more general cases (and a less casual approach), see Shank and Yang.

So, the most naive approach to the most naive formulation of the problem is brute-force counting. However, once we started to brute-force count for

Since I’m lazy, I tired of brute force counting the sequences at

The coin redux, this time as a PGF

I’m not going to do justice to generating functions in this space. I recommend Knuth et. al. as a gentle introduction and Wilf if you’re into that kind of thing. But I will give the basic ideas. Imagine that you have a function

Suppose you also happen to have a recurrence relation for

If this is new to you, I’ll say that it really helps to start with simple recursions and work your way up. But, we’re not going to do any of that here. Instead, we are going to take some liberties and just use the machinery. But I am going to go through the calculations in (maybe excessive) detail to help allay some of the fear that people seem to have of GFs.

The first thing we are going to do is realize that any series sum that involves powers of things can be “converted” to a GF by taking some liberties and making some suitable replacements. Why would we want to do this? Well, it all depends on how you choose to interpret the coefficients. In particular, if you interpret the

Notice a couple of things here: First, that

for everything that we consider to be a probability. Also, notice that

Now this is useful to lazy amateur combinatorialist. Brute-force counting sequences to calculate

where

This saves us from having to screw around with partial fractions. Now, let’s use all of this on an unsuspecting sum to see if it works. In light of thinking about PGFs, our original

If you look back at our earlier calculation of

The coin again, this time as a finite automaton

We return now to the hint that Knuth et. al. gave us about using finite automata to help generate

I’ve labeled the states by number as well. We start in a state

Here we have identified

This form should be familiar enough now that I don’t have to convince you that this approach worked.

Coupons with Equal Probabilities

We are now prepared to take on the CCP for arbitrary

Here the states are labeled by the number of distinct coupons we’ve seen. The symbol

Here

This leads us to conclude that

As before, we make the substitution

Also, as before, we make the substitution

Then

Things are looking very promising. Now we evaluate

Interestingly, this means that

By now, we are tired and we resort to some street fighting, Wolfram Alpha-style, which gives us

using the fact that

using

Now to answer the original question for the equally probable Coupons. In general we can get a one-sided confidence interval for

Setting

For a permutation of order

Finally, for large

In my weird and twisted way, this is a much more satisfying approach to answer the original question. I’ve always been interested to see how we can apply this to arbitrary finite automata. For instance, I’m curious to use this technique on the KMP Algorithm. I’m a fan of David Eppstein’s Notes on algorithms from long ago in internet time, particularly where he shows a state machine for KMP:

Conclusion

Even though I don’t like the canonical explanation, the Wikipedia page on CCP has many useful links. One my favorites in particular is by Flajolet et. al.. That’s a truly systematic approach to the problem, and shows interesting connections to other allocation problems.

Dan Jacob Wallace takes different approach that I enjoyed reading.